PROCESS

Step 1: Image Generation

The foundation of the process is generating images that set the visual tone for each reel. The goal of this step is to create a set of images that can be animated, edited and refined into a cohesive video.

At the start of AI Slop, SDXL was used for direct injection of images into AnimateDiff workflows in ComfyUI. As the project evolved, Midjourney became the primary tool for its speed and ability to refine personal aesthetics. Flux was released mid-project and briefly explored, but it wasn't fully integrated.

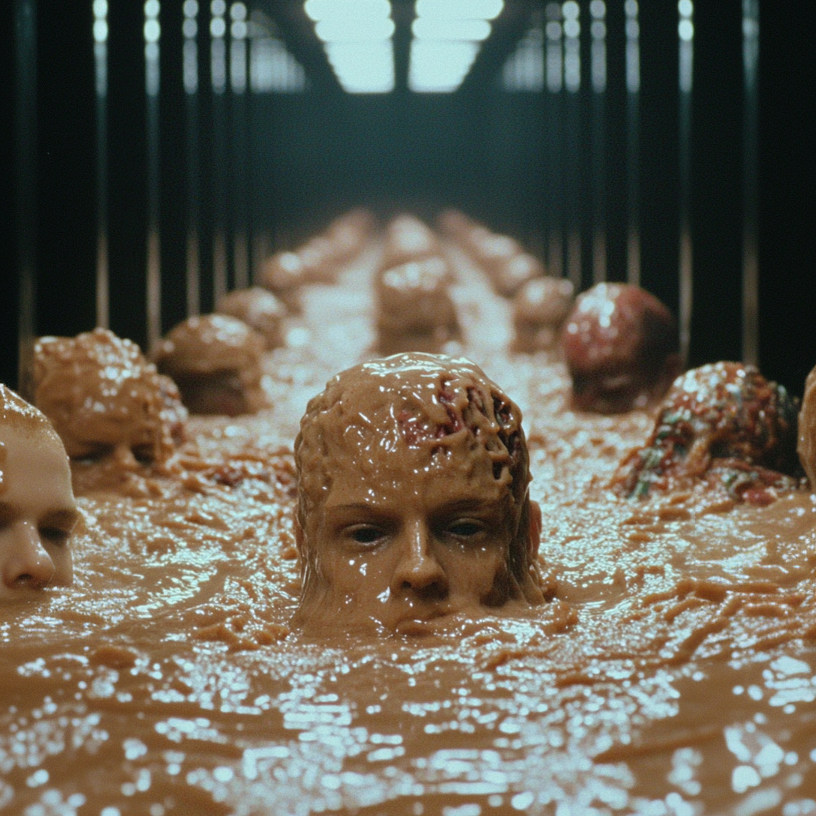

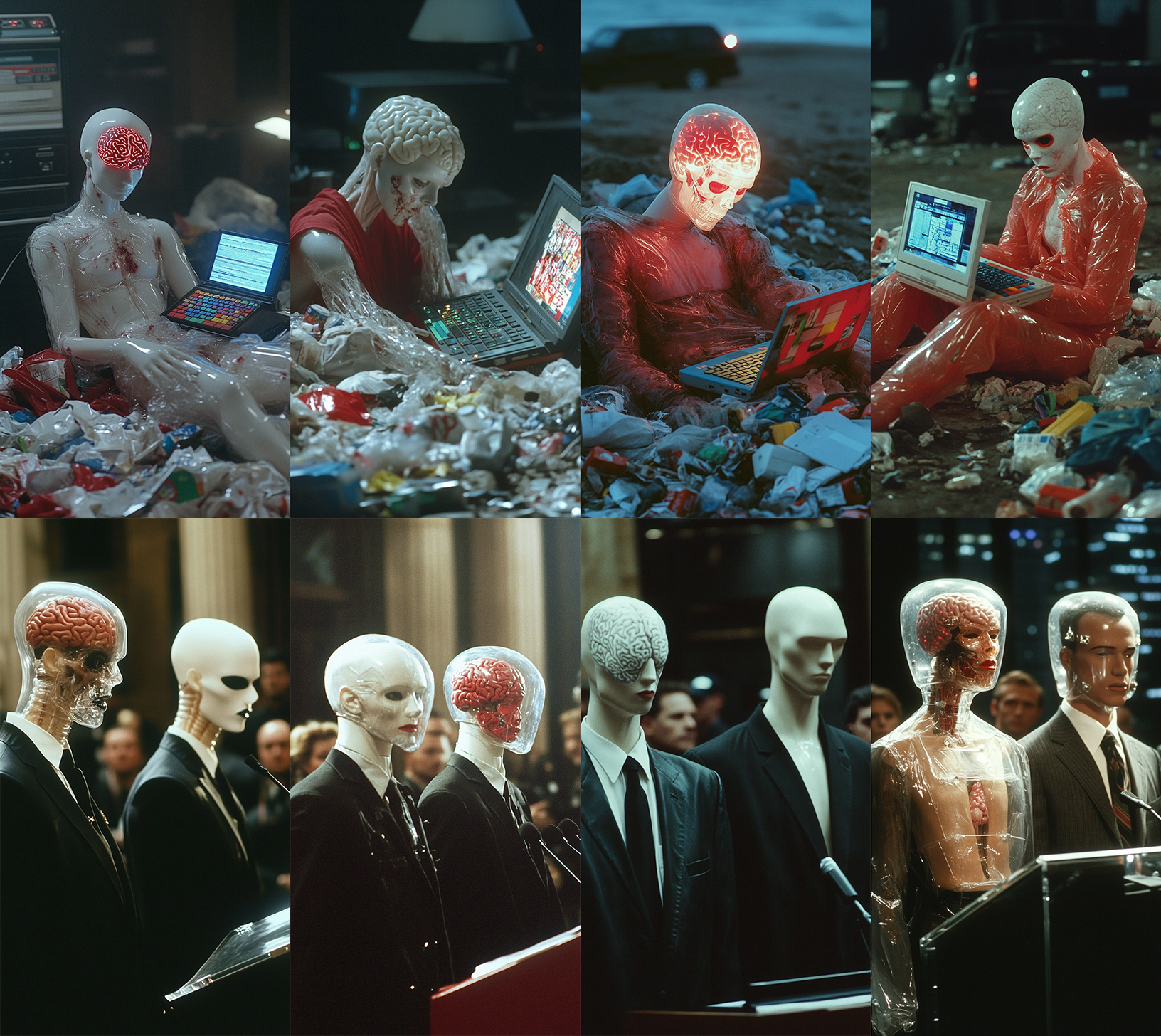

Midjourney is one of the most accessible image generators, but it also offers a surprisingly deep suite of tools for power users. One of the most powerful features is aesthetic personalization, which allows users to refine output to align with their artistic taste. This feature activates once a user has ranked enough images, and it gradually tilts results toward personal preferences. After ranking hundreds of images, outputs during AI Slop evolved into a distinct retro-dystopian aesthetic.

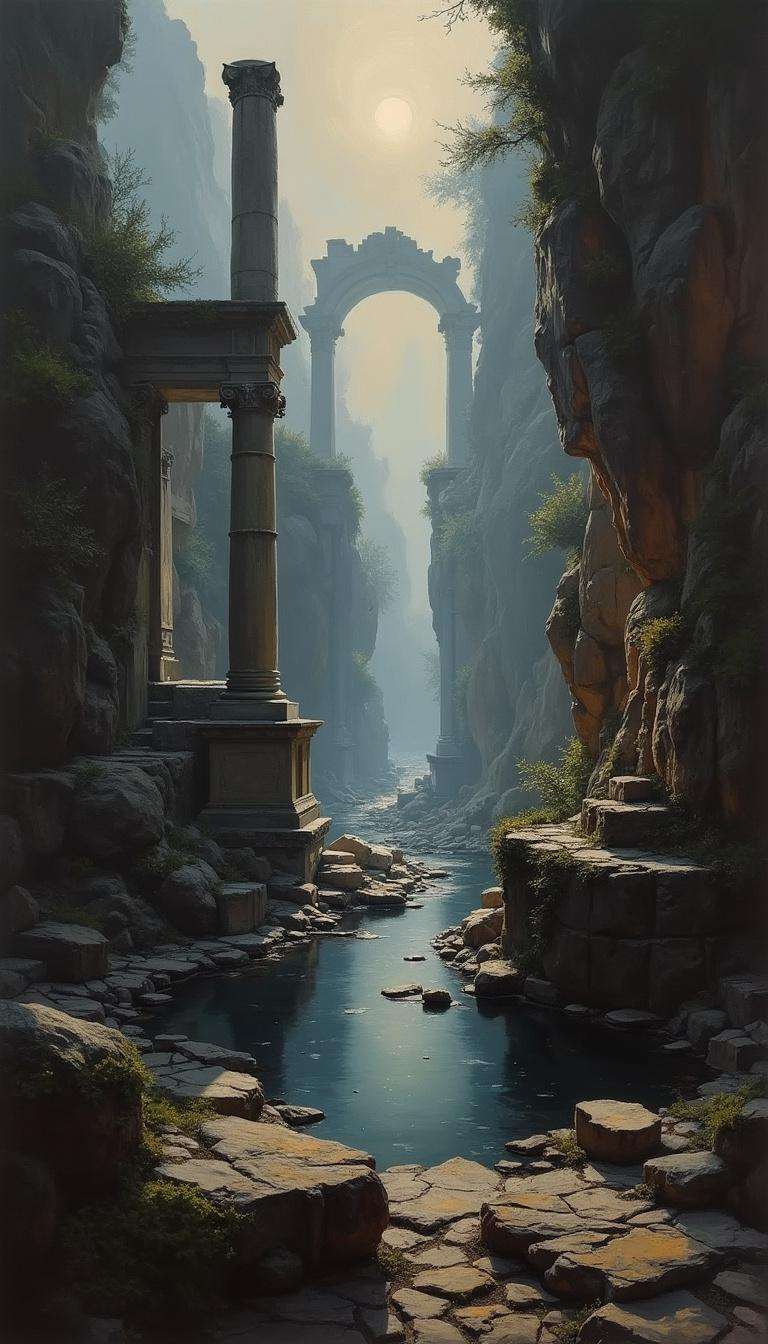

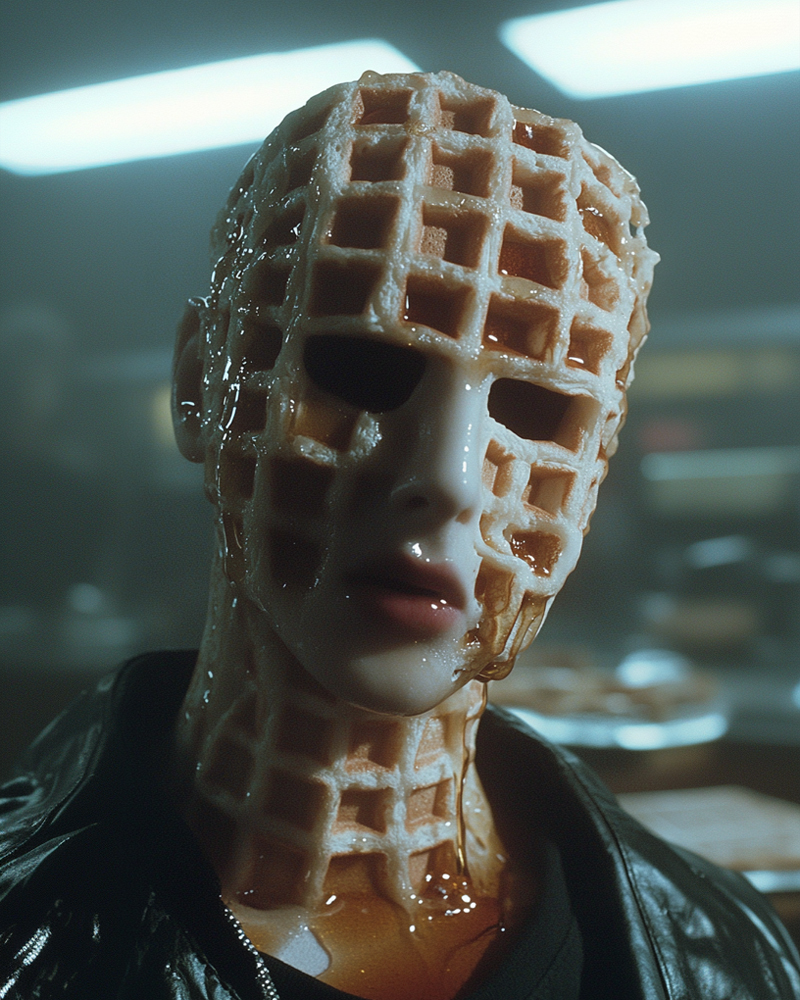

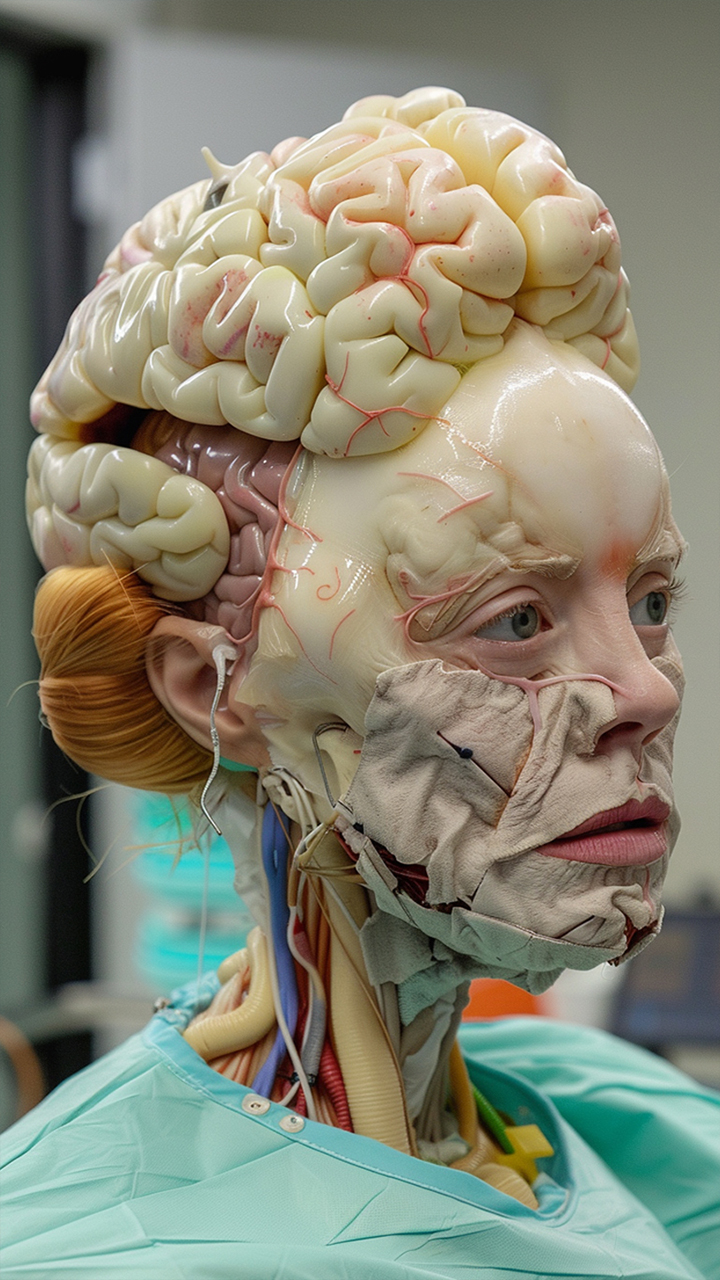

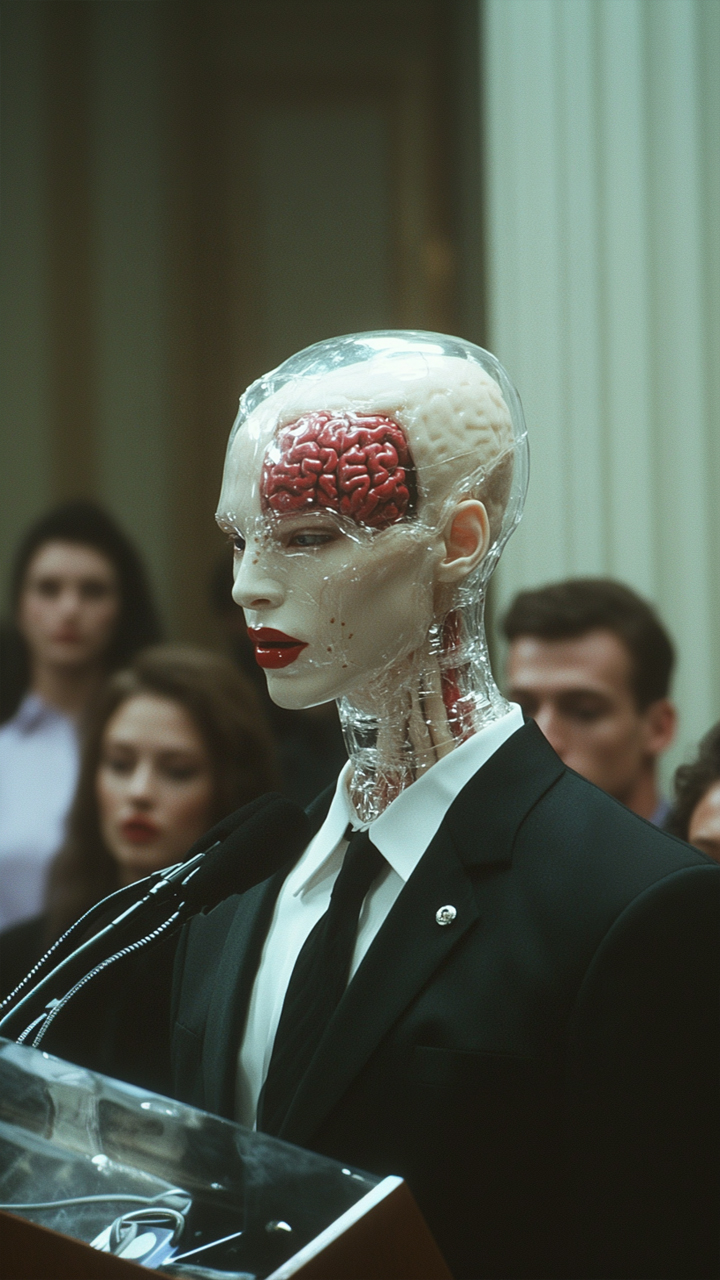

Fig 2.1: Default Midjourney outputs

Fig 2.2: The same outputs after aesthetic personalization

An aspect ratio of 9:16 is optimal for Instagram Reels. For any given reel, about 40-50 images were generated. Zoomed-out variants of several images were also generated to allow for zoom cuts in the edit.

Fig 3.1: Base image zoomed out by a factor of 1.5x twice

Fig 3.2: Zoomed-out variants offer flexibility in post-production
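
As a rough illustration of why two 1.5x zoom-outs leave room for zoom cuts, the arithmetic can be sketched in a few lines. This is a minimal sketch, not part of the original workflow: the function names are hypothetical, and it assumes the zoomed-out variant is delivered at the same 1080x1920 frame size as the base image.

```python
def cumulative_zoom(factor: float, steps: int) -> float:
    """Total zoom-out after applying the same factor several times."""
    return factor ** steps


def original_region(width: int, height: int, total_zoom: float) -> tuple[int, int]:
    """Pixel size that the base image's content occupies in the zoomed-out frame.

    Assumes the zoomed-out frame has the same pixel dimensions as the base image.
    """
    return round(width / total_zoom), round(height / total_zoom)


total = cumulative_zoom(1.5, 2)
print(total)                                # 2.25
print(original_region(1080, 1920, total))   # (480, 853)
```

Under these assumptions, the base composition fills roughly 44% of the width of the widest variant, so the edit can cut or push between a wide framing and the original framing.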

Step 2: Video Generation

With a full set of images prepared, the next step was to transform them into video clips.

AI Slop initially used AnimateDiff running locally in ComfyUI before migrating to commercially available img2vid tools including Luma Dream Machine, Runway Gen-3, and KlingAI.

KlingAI emerged as the main tool, delivering consistent quality with the ability to generate multiple concurrent clips. Luma Dream Machine provided quick generation but (at the time) sometimes produced static camera movements rather than depth-aware scenes with animated characters. Despite its capabilities, Runway Gen-3 wasn't incorporated into any final reels due to its lack of support for portrait orientation.

For each reel, 30-50 video clips (5 seconds each) were generated, providing sufficient material for editing.

Fig 4: For 'coney island', 42 video clips were generated using Kling v1.5

Step 3: Music Generation

With all visual elements prepared, music became the next step in the process. Early reels incorporated tracks licensed from Instagram's catalog, but throughout the project, AI audio generation tools became increasingly core to the workflow. Two primary tools emerged as essential: Suno and Udio.

For each reel, the process typically involved generating around 10, and sometimes up to 30, different music tracks, then shortlisting 2-5 of the best options for the final edit. This approach provided enough variety to find the right sonic match for each visual sequence while maintaining efficiency.

In-N-Out vs Shake Shack (September 18)

Coney Island (September 12)

Fig 5.1: Signature tracks from Suno

A Guacalypse (September 14)

Macaroni Business (September 21)

Fig 5.2: Signature tracks from Udio

Importantly, all music generated for AI Slop was entirely instrumental. This deliberate choice eliminated the complexity that vocals would have introduced to a workflow optimized for speed and consistency.

Step 4: The Edit

With all audiovisual elements prepared, editing brings everything together into the final product. Editing was done "the old-fashioned way": it was the only part of the workflow that did not use automation or GenAI.

The edit begins with importing all generated video clips and shortlisted music tracks into Adobe Premiere Pro using a standard 1080x1920 template optimized for Instagram Reels at 60fps.

Fig 6: For 'coney island', 3:35 of footage was cut down to 24 seconds

First comes a series of "listening sessions" where the video clips are played alongside each shortlisted music track to identify which audio and visuals complement each other. After listening to the various options, one music track emerges as the foundation for the final edit.

Once the track is selected, the sound edit takes priority. This involves identifying and extracting the most compelling section of music, typically two four-bar loops or a 20-30 second segment that pairs with the visuals. The music creates the backbone for the entire edit, with cuts placed on musical beats, typically at the start of bars or half-bars to maintain rhythm.
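
To make the bar and half-bar cut grid concrete, here is a minimal sketch of how cut timestamps could be derived from a track's tempo. The tempo of 80 BPM and the 4/4 time signature are assumptions chosen for illustration, not values from the project, and the function names are hypothetical.

```python
def bar_seconds(bpm: float, beats_per_bar: int = 4) -> float:
    """Duration of one bar in seconds at the given tempo."""
    return beats_per_bar * 60.0 / bpm


def cut_points(bpm: float, bars: int, beats_per_bar: int = 4,
               half_bars: bool = True) -> list[float]:
    """Timestamps (seconds) of candidate cuts on bar or half-bar boundaries."""
    divisions = 2 if half_bars else 1
    step = bar_seconds(bpm, beats_per_bar) / divisions
    return [round(i * step, 3) for i in range(bars * divisions + 1)]


# Assumed example: two four-bar loops (8 bars) at 80 BPM in 4/4.
print(bar_seconds(80))                       # 3.0
print(cut_points(80, 8, half_bars=False))    # [0.0, 3.0, 6.0, ..., 24.0]
print(len(cut_points(80, 8)))                # 17 half-bar positions
```

At this assumed tempo, two four-bar loops last 24 seconds, which falls inside the 20-30 second range described above.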

Every video clip is watched, then sorted based on visual interest. High-interest clips with the strongest visual impact bookend the loop at the start or end of the sequence, medium-interest clips make up the body of the edit, and the rest are used in transitions or are cut entirely.
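
The sorting described above was done by eye, but the ordering logic can be sketched in code. This is only an illustration: the 1-3 interest rating, the `Clip` type, and the `arrange` function are hypothetical, and alternating high-interest clips between the opening and the closing is one possible reading of "bookend".

```python
from dataclasses import dataclass


@dataclass
class Clip:
    name: str
    interest: int  # hypothetical rating: 3 = high, 2 = medium, 1 = low


def arrange(clips: list[Clip]) -> tuple[list[Clip], list[Clip]]:
    """Return (sequence, leftovers) with high-interest clips at both ends."""
    high = [c for c in clips if c.interest == 3]
    medium = [c for c in clips if c.interest == 2]
    leftovers = [c for c in clips if c.interest < 2]  # transitions or cut
    opening = high[0::2]   # every other high-interest clip opens the loop
    closing = high[1::2]   # the rest close it
    return opening + medium + closing, leftovers


clips = [Clip("a", 3), Clip("b", 2), Clip("c", 1), Clip("d", 3), Clip("e", 2)]
sequence, leftovers = arrange(clips)
print([c.name for c in sequence])   # ['a', 'b', 'e', 'd']
print([c.name for c in leftovers])  # ['c']
```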

The AI video generators tended to generate unnaturally slow or wandering camera movements, so each clip was individually reviewed and typically sped up to 1.5-2x its original speed to create the illusion of more natural movement.
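
The effect of this retiming on clip length is simple arithmetic, sketched below for the 5-second clips mentioned earlier. The function name is hypothetical.

```python
def retimed_duration(source_seconds: float, speed: float) -> float:
    """Playback duration of a clip after a constant speed change."""
    if speed <= 0:
        raise ValueError("speed must be positive")
    return source_seconds / speed


for speed in (1.5, 2.0):
    print(f"{speed}x -> {retimed_duration(5.0, speed):.2f} s")
# 1.5x -> 3.33 s
# 2.0x -> 2.50 s
```

A 5-second generated clip therefore yields roughly 2.5 to 3.3 seconds of usable screen time before any trimming.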

The edit was then continually iterated, testing each cut for flow, pacing and implied narrative. Subtle motion was added with Ken Burns-style zooms, and cuts were aligned to audio half-measures. Color grading and image enhancement finished the edit. Sharpen, Unsharp Mask and Noise effects were added to every clip, and Lumetri in Premiere was used to color grade and match the shots prior to posting.

Step 5: Final Output

With the edit complete, the final step was to prepare the output for social media. Reels were exported at 1080x1920 resolution and 60fps, using H.264 video with AAC audio and 2-pass variable bit rate encoding at maximum quality.
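
The reels were exported from Premiere Pro, but for readers without it, a comparable two-pass H.264 encode can be approximated with ffmpeg. The sketch below only builds and prints the two command lines; it does not run them. The file names and the 20M and 320k bitrates are placeholder assumptions, not the project's settings, and the `/dev/null` sink assumes a Unix-like system.

```python
import shlex


def two_pass_commands(src: str, dst: str,
                      video_bitrate: str = "20M",     # assumed placeholder
                      audio_bitrate: str = "320k"):   # assumed placeholder
    """Build ffmpeg argument lists for a two-pass H.264 + AAC encode."""
    common = [
        "ffmpeg", "-y", "-i", src,
        "-vf", "scale=1080:1920",   # portrait frame size for Reels
        "-r", "60",                 # 60fps output
        "-c:v", "libx264",
        "-b:v", video_bitrate,
    ]
    # Pass 1 analyses the video only and discards the output.
    first = common + ["-pass", "1", "-an", "-f", "null", "/dev/null"]
    # Pass 2 writes the final file and adds AAC audio.
    second = common + ["-pass", "2", "-c:a", "aac", "-b:a", audio_bitrate, dst]
    return first, second


first, second = two_pass_commands("edit_master.mov", "reel.mp4")
print(shlex.join(first))
print(shlex.join(second))
```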

Final enhancement was done in Topaz Video AI, applying both frame interpolation and image enhancement models. This process added another layer of polish to improve fidelity and fluidity.

Fig 7: Original 30fps on the left, enhanced 60fps with frame interpolation and enhancement on the right. From 'artisanal intelligence', September 17, 2024

This process took about 10 minutes to compute locally on a 4090-equipped workstation, or about 3 hours on a MacBook Pro while on the road. Once completed, the reel was ready to post.